Antsle Forum

Main drive died, trying to recover antlets from ZPOOL mirror

Quote from SomeWhoCallMeTim on November 8, 2022, 6:00 am

Hi all,

Upfront confession...I am NOT a Linux person by any stretch of the imagination.

We had our SSD die over the weekend, but the secondary/mirror drive seems to be fine.

Following some helpful instructions on the forum, we have replaced the dead drive, loaded CentOS, and have AntMan up and running. There is a new and healthy zpool (named antlets) on the new drive with the active OS.

The second drive is attached, and I can see that there is an existing zpool on it (which we managed to rename to antlets-old to avoid confusion). antlets-old shows up in AntMan as 72% full, so I'm taking that as a good sign that there is data to be recovered somewhere.

The zpool status shows the mirror as degraded, but I can't figure out how to tell antlets-old to put its data into antlets on the new drive to rebuild the mirror and bring everything back to life.

Results of a couple of zpool commands are listed below.

Any help would be greatly appreciated!

root@carantsle1:~ # zpool list
NAME SIZE ALLOC FREE CKPOINT EXPANDSZ FRAG CAP DEDUP HEALTH ALTROOT
antlets 456G 47.6G 408G - - 0% 10% 1.00x ONLINE -
antlets-old 448G 327G 121G - - 0% 72% 1.00x DEGRADED -

root@carantsle1:~ # zpool status
pool: antlets
state: ONLINE
scan: none requested
config:
NAME STATE READ WRITE CKSUM
antlets ONLINE 0 0 0
sda4 ONLINE 0 0 0
errors: No known data errors

pool: antlets-old
state: DEGRADED
status: One or more devices could not be used because the label is missing or
invalid. Sufficient replicas exist for the pool to continue
functioning in a degraded state.
action: Replace the device using 'zpool replace'.
scan: none requested
config:
NAME STATE READ WRITE CKSUM
antlets-old DEGRADED 0 0 0
mirror-0 DEGRADED 0 0 0
5244363799507771969 FAULTED 0 0 0 was /dev/sda4
sdb2 ONLINE 0 0 0
errors: No known data errors

***The faulted UID is NOT the UID of the antlet on the hard drive that is still functional from the original setup. See results of lsblk -f below.

root@carantsle1:~ # lsblk -f
NAME FSTYPE LABEL UUID MOUNTPOINT
sda zfs_member antlets 2226498227691933255
├─sda1 vfat antlets B315-52F5 /boot/efi
├─sda2 xfs antlets ba0f9242-a78d-41eb-80c2-a30bda59c3e0 /boot
├─sda3 xfs antlets 5bdaeba9-0b47-4920-bdc2-f48fb5036cf2 /
└─sda4 zfs_member antlets 2226498227691933255
sdb
├─sdb1 swap d5a07a24-a407-43c0-b7ab-9b9b2ad0e6ed
└─sdb2 zfs_member antlets 475084199677983392

***sdb is drive holding data we are after. UID ending in 3392 is what we want to retain and use.
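For readers who want to pull the key facts out of a long status dump like the one above, here is a minimal sketch. It only reads text in the `zpool status` layout and reports pools whose state is not ONLINE, plus any FAULTED or UNAVAIL members. The function name `unhealthy_pools` is made up for this illustration, and the sketch changes nothing on disk.

```shell
#!/bin/sh
# Sketch only: summarize `zpool status` text read from stdin.
# `unhealthy_pools` is a hypothetical helper name, not an antsle or ZFS tool.
unhealthy_pools() {
    awk '
        # Remember which pool the following lines belong to.
        $1 == "pool:"  { pool = $2; next }
        # Report any pool whose overall state is not ONLINE.
        $1 == "state:" { if ($2 != "ONLINE") print pool, "state", $2; next }
        # Report individual members that ZFS marks as missing or failed.
        $2 == "FAULTED" || $2 == "UNAVAIL" { print pool, "member", $1, $2 }
    '
}

# On the host (assumes the zpool CLI is installed):
#   zpool status | unhealthy_pools
```

Run against the output in this post, it would list antlets-old as DEGRADED and name the numeric member that ZFS recorded as "was /dev/sda4", which is the old, dead partition rather than anything on sdb.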

Quote from daniel.luck on November 8, 2022, 7:38 pm

Hello @somewhocallmetim

Thanks for reaching out to antsle Support.

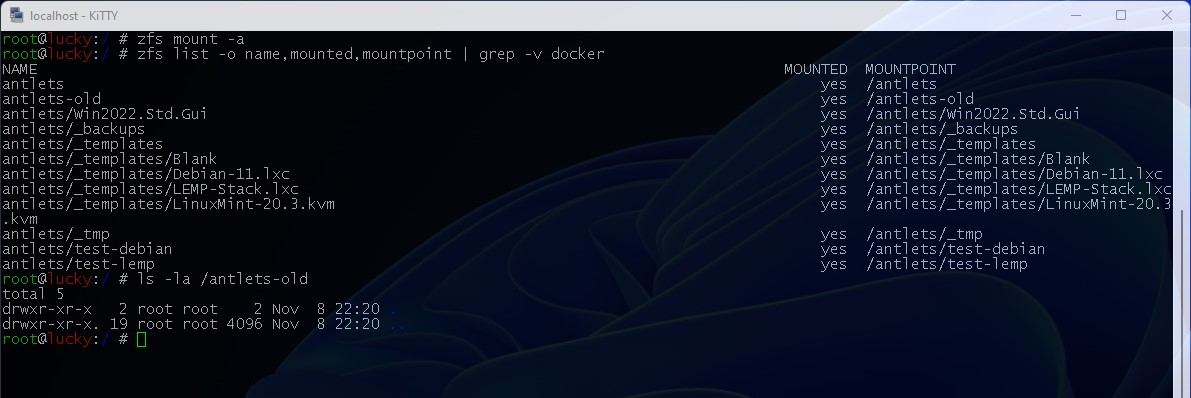

Here are some commands you can use to see if zpools are mounted:

zfs mount -a

zfs list -o name,mounted,mountpoint | grep -v docker

ls -la /antlets-old

In my example, I created a blank zpool called "antlets-old" so there are no contents.

Thank you,

antsle Support
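As a small companion to the commands in this reply, the sketch below filters `zfs list -o name,mounted,mountpoint` output down to the datasets that are not mounted. The helper name `unmounted_datasets` is invented for this example, and it only reads text from stdin.

```shell
#!/bin/sh
# Sketch only: print "dataset mountpoint" for every dataset whose MOUNTED
# column is "no". `unmounted_datasets` is a hypothetical helper name.
unmounted_datasets() {
    # The header row has "MOUNTED" in column 2, so it never matches "no".
    awk '$2 == "no" { print $1, $3 }'
}

# On the host (assumes the zfs CLI is installed):
#   zfs list -o name,mounted,mountpoint | grep -v docker | unmounted_datasets
```

An empty result would mean every listed dataset is mounted. Any lines printed are the datasets to look at first.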

Quote from SomeWhoCallMeTim on November 9, 2022, 5:09 am

Apparently somebody doesn't like me very much. Overnight, my secondary drive with all our antlets died.

Thankfully, we made a backup of it, and after a restoration, I have a secondary drive which shows the original antlets pool.

At this point, running zpool list gives a result of no pools available.

How do I get the AntMan to have a pool, and how do I get my old data into that pool and mirror?

root@carantsle1:~ # lsblk -f

NAME FSTYPE LABEL UUID MOUNTPOINT

sda zfs_member antlets 2226498227691933255

├─sda1 vfat antlets B315-52F5 /boot/efi

├─sda2 xfs antlets ba0f9242-a78d-41eb-80c2-a30bda59c3e0 /boot

├─sda3 xfs antlets 5bdaeba9-0b47-4920-bdc2-f48fb5036cf2 /

└─sda4 zfs_member antlets 2226498227691933255

sdb

├─sdb1

├─sdb2 swap d5a07a24-a407-43c0-b7ab-9b9b2ad0e6ed

└─sdb3 zfs_member antlets 475084199677983392

root@carantsle1:~ # zpool status

no pools available

Quote from SomeWhoCallMeTim on November 9, 2022, 8:06 am

Here is where I currently am: the new OS showed that Docker had failed, so I wound up rebuilding the OS drive again.

The original antlets drive died, so I wound up recovering from a backup to a new drive. The earlier rename of the old pool is no longer in place.

I ran some commands below so you can see what I have. At this point, it looks like I just need to get the omt-antlets mirror rebuilt. It exists on sdb3, and I want it to mirror onto sda5.

Here is what I tried, and the responses.

root@myantsle:~ # zpool import antlets

cannot import 'antlets': a pool with that name already exists

use the form 'zpool import <pool | id> <newpool>' to give it a new name

root@myantsle:~ # zpool replace omt-antlets sda4 sda5

invalid vdev specification

use '-f' to override the following errors:

/dev/sda5 is part of active pool 'antlets'

root@myantsle:~ # zpool replace omt-antlets -f sda4 sda5

invalid vdev specification

the following errors must be manually repaired:

/dev/sda5 is part of active pool 'antlets'

General commands for update info:

root@myantsle:~ # lsblk -f

NAME FSTYPE LABEL UUID MOUNTPOINT

sda zfs_member antlets 16220368593226924245

├─sda1 vfat antlets DF73-335D /boot/efi

├─sda2 xfs antlets 73536ea9-6d0f-4f37-9b06-baf4744730fe /boot

├─sda3 xfs antlets 1a324b56-404f-4451-a490-dcce53f14a0b /

├─sda4 swap antlets 99fd53b1-ed55-438d-aae3-d2110379ae72 [SWAP]

└─sda5 zfs_member antlets 16220368593226924245

sdb

├─sdb1

├─sdb2 swap d5a07a24-a407-43c0-b7ab-9b9b2ad0e6ed

└─sdb3 zfs_member antlets 475084199677983392

root@myantsle:~ # zpool status

pool: antlets

state: ONLINE

scan: none requested

config:

NAME STATE READ WRITE CKSUM

antlets ONLINE 0 0 0

sda5 ONLINE 0 0 0

errors: No known data errors

pool: omt-antlets

state: DEGRADED

status: One or more devices could not be used because the label is missing or

invalid. Sufficient replicas exist for the pool to continue

functioning in a degraded state.

action: Replace the device using 'zpool replace'.

see: http://zfsonlinux.org/msg/ZFS-8000-4J

scan: none requested

config:

NAME STATE READ WRITE CKSUM

omt-antlets DEGRADED 0 0 0

mirror-0 DEGRADED 0 0 0

5244363799507771969 UNAVAIL 0 0 0 was /dev/sda4

sdb3 ONLINE 0 0 0

root@myantsle:~ # zfs list -o name,mounted,mountpoint | grep -v docker

NAME MOUNTED MOUNTPOINT

antlets yes /antlets

antlets/_backups yes /antlets/_backups

antlets/_templates yes /antlets/_templates

antlets/_templates/Blank yes /antlets/_templates/Blank

antlets/_templates/CentOS-7 yes /antlets/_templates/CentOS-7

antlets/_templates/FreeBSD yes /antlets/_templates/FreeBSD

antlets/_templates/debian yes /antlets/_templates/debian

antlets/_tmp yes /antlets/_tmp

omt-antlets no /antlets

omt-antlets/CAR-GATEWAY-1 no /antlets/CAR-GATEWAY-1

omt-antlets/CAR-GATEWAY-C2 no /antlets/CAR-GATEWAY-C2

omt-antlets/CAR-GW-3 no /antlets/CAR-GW-3

omt-antlets/Util no /antlets/Util

omt-antlets/Util2 no /antlets/Util2

omt-antlets/Util2-back no /antlets/Util2-back

omt-antlets/_backups no /antlets/_backups

omt-antlets/_templates no /antlets/_templates

omt-antlets/_templates/CentOS-7 no /antlets/_templates/CentOS-7

omt-antlets/_templates/FreeBSD no /antlets/_templates/FreeBSD

omt-antlets/_templates/Ubuntu16.04 no /antlets/_templates/Ubuntu16.04

omt-antlets/_templates/WagoTemplate.kvm no /antlets/_templates/WagoTemplate.kvm

omt-antlets/_templates/Win10 no /antlets/_templates/Win10

omt-antlets/_templates/Win10-Spice no /antlets/_templates/Win10-Spice

omt-antlets/_templates/Win2012 no /antlets/_templates/Win2012

omt-antlets/_templates/debian no /antlets/_templates/debian

omt-antlets/_templates/ubuntu-xenial no /antlets/_templates/ubuntu-xenial

omt-antlets/_tmp no /antlets/_tmp

omt-antlets/gate-1-backup no /antlets/gate-1-backup

Quote from daniel.luck on November 9, 2022, 6:29 pm

Hi @somewhocallmetim

From looking at the results, it appears that you have two zpools with the same name, "antlets".

In this case, we have to export the zpools and then import the other one with a different name.

First we have to stop antMan:

systemctl stop antman

To export the zpools:

zpool export -a

To list the zpools:

zpool import

To import the specific zpool:

zpool import <id>

To import the other with a different name:

zpool import antlets antlets_newname

See attached screenshot for details. I didn't have two zpools with the same name but I renamed antlets-old to antlets2.

Thanks,

antsle Support
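The sequence in this reply can be laid out as a dry run before touching anything. The sketch below only prints the commands in order and executes none of them. The function name `plan_rename_import` and its three arguments are placeholders for this illustration. The real numeric ids would come from the `zpool import` listing, and the second import uses the `zpool import <pool | id> <newpool>` form quoted in the earlier error message, with the id given because both pools share a name.

```shell
#!/bin/sh
# Sketch only: print the export / re-import steps for review. Nothing runs.
# plan_rename_import and its arguments are hypothetical placeholders:
#   $1 = id of the pool that keeps its name
#   $2 = id of the pool to bring in under a new name
#   $3 = the new name for that second pool
plan_rename_import() {
    keep_id=$1
    other_id=$2
    new_name=$3
    echo "systemctl stop antman"               # stop antMan first
    echo "zpool export -a"                     # export all imported pools
    echo "zpool import"                        # list importable pools and ids
    echo "zpool import $keep_id"               # import one pool under its own name
    echo "zpool import $other_id $new_name"    # import the other under a new name
}

# Example with made-up ids:
#   plan_rename_import 1111 2222 antlets_old
```

Printing the plan first makes it easy to check the ids against the `zpool import` listing before running each line by hand.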

Quote from SomeWhoCallMeTim on November 10, 2022, 4:07 am

Response when trying to export:

root@carantsle1:~ # zpool export -a

umount: /var/lib/docker: target is busy

(In some cases useful info about processes that

use the device is found by lsof(8) or fuser(1).)

cannot unmount '/var/lib/docker': umount failed
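The error itself points at lsof(8) and fuser(1) for finding what keeps the mount busy. As a rough sketch, the helper below pulls the busy path out of that error text so it can be handed to one of those tools. The name `busy_targets` is made up for this example. That the Docker daemon is what holds /var/lib/docker here is only a guess, not something the thread confirms.

```shell
#!/bin/sh
# Sketch only: extract the path from "umount: <path>: target is busy" lines
# read on stdin. `busy_targets` is a hypothetical helper name.
busy_targets() {
    sed -n 's/^umount: \(.*\): target is busy.*$/\1/p'
}

# On the host, each busy path could then be inspected with fuser, e.g.:
#   zpool export -a 2>&1 | busy_targets | while read -r path; do
#       fuser -vm "$path"    # list processes using that mount
#   done
```

Once the process holding the mount is known and stopped, the export can be retried.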

Quote from SomeWhoCallMeTim on November 10, 2022, 6:17 am

I just purchased a Grow Plan, and have submitted ticket 12403.

@daniel-luck, thank you for your assistance! I need some priority support on this one so I can get our systems back up and running ASAP.

Quote from daniel.luck on November 10, 2022, 11:23 am

Hi @somewhocallmetim

Thanks for the update.

I will grab your ticket and work from there.

Thanks,

antsle Support